Choose Your Transformer & Transformer Ranker

The problem:There are too many pre-trained language models (LMs) out there. But which one of them is best for your NLP classification task? Since fine-tuning LMs is costly, it is not possible to try them all!

The solution: Transferability estimation with TransformerRanker!

TransformerRanker is a library that

-

quickly finds the best-suited language model for a given NLP classification task. All you need to do is to select a dataset and a list of pre-trained language models (LMs) from the 🤗 HuggingFace Hub. TransformerRanker will quickly estimate which of these LMs will perform best on the given task!

-

efficiently performs layerwise analysis of LMs. Transformer LMs have many layers. Use TransformerRanker to identify which intermediate layer is best-suited for a downstream task!!

Abstract (Approach Paper)

There currently exists a multitude of pre-trained transformer language models (LMs) that are readily available. From a practical perspective, this raises the question of which pre-trained LM will perform best if fine-tuned for a specific downstream NLP task. However, exhaustively fine-tuning all available LMs to determine the best-fitting model is computationally infeasible. To address this problem, we present an approach that inexpensively estimates a ranking of the expected performance of a given set of candidate LMs for a given task. Following a layer-wise representation analysis, we extend existing approaches such as H-score and LogME by aggregating representations across all layers of the transformer model. We present an extensive analysis of 20 transformer LMs, 6 downstream NLP tasks, and various estimators (linear probing, kNN, H-score, and LogME). Our evaluation finds that averaging the layer representations significantly improves the Pearson correlation coefficient between the true model ranks and the estimate, increasing from 0.58 to 0.86 for LogME and from 0.65 to 0.88 for H-score.

BEAR & LM Pub Quiz

Knowledge probing assesses to which degree a language model (LM) has successfully learned relational knowledge during pre-training. Probing is an inexpensive way to compare LMs of different sizes and training configurations. However, previous approaches rely on the objective function used in pre-training LMs and are thus applicable only to masked or causal LMs. As a result, comparing different types of LMs becomes impossible. To address this, we propose an approach that uses an LM's inherent ability to estimate the log-likelihood of any given textual statement. We carefully design an evaluation dataset of 7,731 instances (40,916 in a larger variant) from which we produce alternative statements for each relational fact, one of which is correct. We then evaluate whether an LM correctly assigns the highest log-likelihood to the correct statement. Our experimental evaluation of 22 common LMs shows that our proposed framework, BEAR, can effectively probe for knowledge across different LM types. We release the BEAR datasets and an open-source framework that implements the probing approach to the research community to facilitate the evaluation and development of LMs.

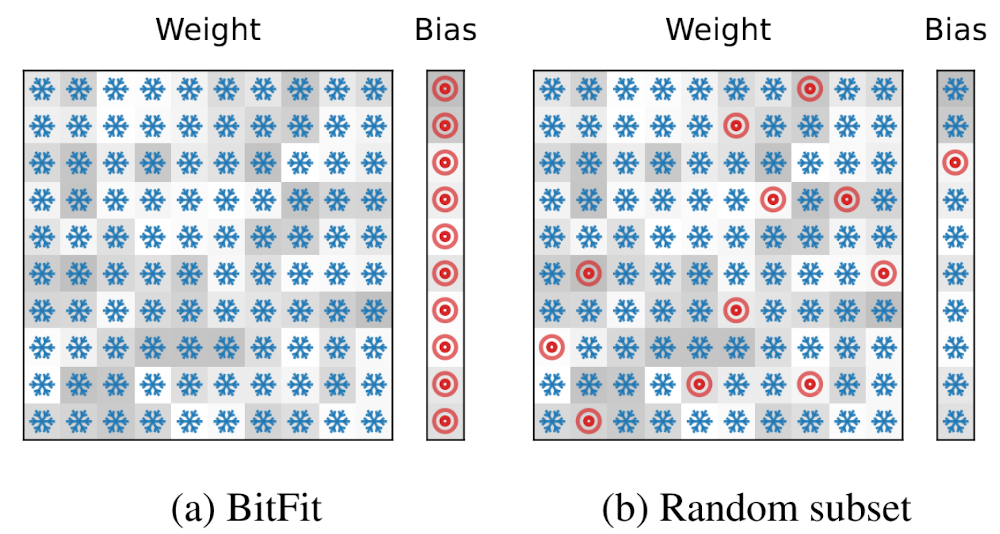

Parameter-Efficient Fine-Tuning: Is There An Optimal Subset of Parameters to Tune?

The ever-growing size of pretrained language models (PLM) presents a significant challenge for efficiently fine-tuning and deploying these models for diverse sets of tasks within memory-constrained environments.In light of this, recent research has illuminated the possibility of selectively updating only a small subset of a model’s parameters during the fine-tuning process.Since no new parameters or modules are added, these methods retain the inference speed of the original model and come at no additional computational cost. However, an open question pertains to which subset of parameters should best be tuned to maximize task performance and generalizability. To investigate, this paper presents comprehensive experiments covering a large spectrum of subset selection strategies. We comparatively evaluate their impact on model performance as well as the resulting model’s capability to generalize to different tasks.Surprisingly, we find that the gains achieved in performance by elaborate selection strategies are, at best, marginal when compared to the outcomes obtained by tuning a random selection of parameter subsets. Our experiments also indicate that selection-based tuning impairs generalizability to new tasks.

An Interactive Introduction to Model-Agnostic Meta-Learning

Interactive article explaining various meta-learning techniques

City Places Scraper

The scrapers get places (businesses, bars, cafés, etc.) from OSM and collects additional metadata including a time-specific popularity index. The scraper was originally written at powerplace.io for internal usage and later made public.